17. July 2024 By Luca Camphuisen

CloudNative-PG in the homelab with Longhorn

In my first blog post, also published on Medium, I showcase my solution for running cloud-native PostgreSQL on Kubernetes (K3s) with Longhorn storage.

Update as of July 2nd 2024: I have added the “Drain nodes with CloudNative-PG volumes” section due to issues I discovered when upgrading from Ubuntu 22.04 to 24.04.

I am a software engineer who is into both Kubernetes and homelabs, and one of the biggest challenges I face is managing stateful data. A popular phrase in the ecosystem is:

Cattle, not pets

I believe this is true to some extent, but somewhere you still need to persist your data. I thought about using separate VMs for databases. However, I really wanted the capabilities that Kubernetes brings and disliked the overhead of VMs.

When I first began my Kubernetes journey, I was just using a Helm chart for PostgreSQL with a single pod/replica (a pet, following the analogy above). I quickly found out that simply deploying a PostgreSQL chart with Longhorn storage will break your database in no time. Upgrading PostgreSQL versions was also not fun and caused downtime (not too important in a homelab, but still not nice).

I then decided to try out CloudNative-PG, a Kubernetes operator that manages the lifecycle of your database. You declare a “Cluster” resource in YAML and the operator manages the database for you. When configured correctly, it can also take periodic backups and restore from various kinds of backups.

Ubuntu & Longhorn

My homelab Kubernetes cluster currently runs on Ubuntu Server 22.04 VMs. I am upgrading to 24.04 but am also looking into Talos. I use K3s and only have NVMe storage. Even before using CloudNative-PG, I ran into issues with Longhorn: various volume mounting errors and a generally rough experience.

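I resolved these issues by creating a /etc/multipath.conf configuration file on the Kubernetes nodes, as described in the related Longhorn troubleshooting page.

In short, the multipathd daemon on Ubuntu can claim the /dev/sdX block devices that Longhorn creates for its volumes, after which those volumes can no longer be mounted into pods. The blacklist below stops multipathd from managing these devices. Multipath itself is difficult to grasp and explain, so I will not go deeper in this post; the Wikipedia page gives a quick summary.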

Create /etc/multipath.conf and write the following contents:

```
blacklist {
    devnode "^sd[a-z0-9]+"
}
```

Then restart the multipathd service (for example with sudo systemctl restart multipathd.service), and Longhorn should no longer have these mounting issues.

To handle node failures, node draining and database replica failover, I have also changed some of Longhorn's default settings. I installed Longhorn as a Helm chart through Argo CD (GitOps). These are some of the values I configured:

```yaml
defaultSettings:
  orphanAutoDeletion: false
  autoDeletePodWhenVolumeDetachedUnexpectedly: true
  nodeDownPodDeletionPolicy: delete-both-statefulset-and-deployment-pod
  nodeDrainPolicy: always-allow
```

These options can also be configured on the settings page of the Longhorn web UI. The “nodeDrainPolicy” setting is explained in the “Drain nodes with CloudNative-PG volumes” section. “always-allow” is required to drain nodes that hold the last (or only) replica of a Longhorn volume. Alternatively, you can keep the default value (“block-if-contains-last-replica”) and switch to “always-allow” only while draining nodes.
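The Longhorn settings above are applied through Argo CD. The sketch below shows what an Argo CD Application that installs the Longhorn chart with these values might look like. The Application name, the project and the chart version are assumptions; pin the version you actually run. The preUpgradeChecker value follows the Longhorn guide for installing with Argo CD.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: longhorn          # assumption: any Application name works
  namespace: argocd       # namespace where Argo CD itself runs
spec:
  project: default        # assumption: the default Argo CD project
  source:
    repoURL: https://charts.longhorn.io
    chart: longhorn
    targetRevision: 1.6.2 # assumption: pin the chart version you run
    helm:
      values: |
        # the Longhorn docs suggest disabling the pre-upgrade checker job for Argo CD installs
        preUpgradeChecker:
          jobEnabled: false
        defaultSettings:
          orphanAutoDeletion: false
          autoDeletePodWhenVolumeDetachedUnexpectedly: true
          nodeDownPodDeletionPolicy: delete-both-statefulset-and-deployment-pod
          nodeDrainPolicy: always-allow
  destination:
    server: https://kubernetes.default.svc
    namespace: longhorn-system
  syncPolicy:
    syncOptions:
      - CreateNamespace=true # let Argo CD create longhorn-system if it is missing
```

Keeping the values inline in the Application means a single Git commit changes both the chart version and its settings.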

Custom Longhorn storage class

Since CloudNative-PG uses application-level replication, we do not want to also replicate the data in Longhorn. If we have 3 database replicas, that would mean we have 9 copies of the data when using the default 3 replicas in Longhorn. We would really like a single copy of the data per database replica.

Another thing that broke my databases when I first began using CloudNative-PG was pod scheduling. Kubernetes would schedule a database pod on a node and Longhorn would try to attach the volume, but the pod would get stuck initialising because the volume could not be mounted.

This was caused by the default Immediate volume binding mode, which provisions and binds the volume without taking the pod's scheduling requirements into account. One of the requirements we want is that the pod is placed on the same node where its volume resides.

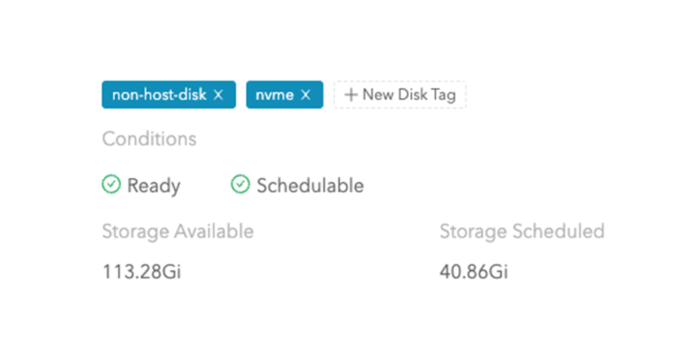

In the Longhorn web UI, I also added an “nvme” tag to the disks, which makes it possible to select the storage medium per application. Go to the “Node” page in the top menu and select a node. Then choose “Edit node and disks” from the dropdown menu on the right and apply the relevant tags:

Part of the “Edit Node and Disks” menu where you manage disk tags.
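The same disk tags end up on Longhorn's own Node custom resource (nodes.longhorn.io in the longhorn-system namespace), which you could also edit with kubectl edit nodes.longhorn.io <NODE-NAME> -n longhorn-system. The excerpt below is a sketch of the relevant part, not a complete manifest. The disk key and path are assumptions, since Longhorn generates the disk name and your data path may differ.

```yaml
# Excerpt of a Longhorn Node resource showing where disk tags live
apiVersion: longhorn.io/v1beta2
kind: Node
metadata:
  name: <NODE-NAME>
  namespace: longhorn-system
spec:
  disks:
    default-disk-1234:          # hypothetical key: Longhorn generates the real one
      path: /var/lib/longhorn/  # assumption: Longhorn's default data path
      allowScheduling: true
      tags:
        - nvme                  # matched by diskSelector: "nvme" in the storage class
```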

After adding the tags to each disk I came up with the following storage class:

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: longhorn-postgres-replica-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "false"
provisioner: driver.longhorn.io
allowVolumeExpansion: true
# WaitForFirstConsumer mode will delay the binding and provisioning of a PersistentVolume until a Pod using the PersistentVolumeClaim is created.
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
parameters:
  numberOfReplicas: "1"
  staleReplicaTimeout: "1440" # 1 day (value is in minutes)
  fsType: "ext4"
  diskSelector: "nvme"
  dataLocality: "strict-local"
  recurringJobSelector: '[
    {
      "name":"postgres-replica-storage",
      "isGroup":true
    }
  ]'
```

This storage class specifies the following:

- NVMe storage. With lower bandwidth/IOPS requirements you could, for example, choose HDD.
- Only one replica of the data.
- The volume must live on the same node as the pod using it (strict-local).
- The WaitForFirstConsumer binding mode.
- A recurring job selector. It adds every volume of this class to the “postgres-replica-storage” recurring job group, so a Longhorn recurring job (for example a backup job) can target all of them at once.

I do not personally use the Longhorn backup feature for PostgreSQL, since I back up using the CloudNative-PG backup CRDs, but I can imagine it being useful. Have a look at all available Longhorn storage class parameters for more information.

If you have multiple types of storage, it may be useful to create a second storage class for the WAL (write-ahead log) data. I only have NVMe storage in my cluster, all at the same speed, so I use the same storage class for both WAL and database data.
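In a cluster with mixed storage, such a second class could look like the sketch below. It is hypothetical. The class name longhorn-postgres-wal-storage is made up, and the sketch assumes the WAL stays on the NVMe-tagged disks while the main data class is pointed at a slower disk tag (for example “ssd”). The Cluster would then reference this class via walStorage.storageClass.

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: longhorn-postgres-wal-storage # hypothetical name
  annotations:
    storageclass.kubernetes.io/is-default-class: "false"
provisioner: driver.longhorn.io
allowVolumeExpansion: true
# same reasoning as before: bind only once the pod has been scheduled
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
parameters:
  numberOfReplicas: "1"        # CloudNative-PG already replicates WAL between instances
  staleReplicaTimeout: "1440"  # minutes
  fsType: "ext4"
  diskSelector: "nvme"         # assumption: fastest medium reserved for WAL
  dataLocality: "strict-local"
```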

Creating your database

I currently run databases for various services in my cluster, such as Keycloak, Harbor, Gitea and my own projects. Most of these were restored from a backup, since I occasionally nuke my services with a wrongly indented YAML file (I need to improve my GitHub Actions and Argo CD integration). Here is an example database resource together with its periodic backup configuration:

```yaml
apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: iam-db
  labels:
    app.kubernetes.io/component: database
    app.kubernetes.io/version: 16.3-bullseye
spec:
  instances: 3
  description: "Keycloak DB"
  imageName: "ghcr.io/cloudnative-pg/postgresql:16.3-bullseye"
  primaryUpdateStrategy: unsupervised
  bootstrap:
    initdb:
      database: iam
      owner: iam
      secret:
        name: db-credentials
  # configure storage types used
  storage:
    storageClass: longhorn-postgres-replica-storage
    size: 2Gi
  walStorage:
    storageClass: longhorn-postgres-replica-storage
    size: 2Gi
  # prometheus
  monitoring:
    enablePodMonitor: true
  # see https://cloudnative-pg.io/documentation/1.22/kubernetes_upgrade/
  nodeMaintenanceWindow:
    reusePVC: false # rebuild from other replica instead
  backup:
    retentionPolicy: "60d"
    barmanObjectStore:
      destinationPath: s3://databases/iam-db
      endpointURL: https://your-s3-minio-or-aws-url
      s3Credentials:
        accessKeyId:
          name: barman-credentials
          key: access-key-id
        secretAccessKey:
          name: barman-credentials
          key: secret-access-key
      wal:
        compression: gzip # this saves a lot of storage
      data:
        compression: gzip
        jobs: 2
```

The YAML above is not perfect, but it works great in a homelab environment. I have run my Keycloak database this way for more than 8 months without any big issues, through PostgreSQL upgrades, daily use and node failures. I will not explain every field, so please read the CloudNative-PG docs. They are very extensive, and the project also has a great community on Slack.

The “db-credentials” and “barman-credentials” values reference Kubernetes secrets. Make sure that “db-credentials” is of type “kubernetes.io/basic-auth” rather than a generic secret.
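Both secrets could look like the sketch below. The values are placeholders. The sketch assumes the secrets live in the same namespace as the Cluster and that the username matches bootstrap.initdb.owner. In a GitOps repository you would normally not commit plaintext values like these; a tool such as Sealed Secrets or External Secrets is the usual alternative.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: db-credentials
type: kubernetes.io/basic-auth # required type for the initdb owner secret
stringData:
  username: iam          # should match bootstrap.initdb.owner
  password: change-me    # placeholder
---
apiVersion: v1
kind: Secret
metadata:
  name: barman-credentials
type: Opaque               # a generic secret is fine for the S3 credentials
stringData:
  access-key-id: your-access-key-id           # placeholder, key referenced by s3Credentials
  secret-access-key: your-secret-access-key   # placeholder, key referenced by s3Credentials
```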

I have also been experimenting with certificate-based auth. Instead of using the CloudNative-PG kubectl plugin (installed via Krew) to manage certificates, I integrated cert-manager to manage the certificate lifecycle. I only apply changes through Argo CD, and cert-manager makes that easier. Please let me know if you would like a post about configuring PostgreSQL/CloudNative-PG with certificates and connecting to the database from Spring Boot and/or IntelliJ.

Periodic backups

Creating scheduled backups is very easy with CloudNative-PG. Just create a “ScheduledBackup” resource like this:

```yaml
apiVersion: postgresql.cnpg.io/v1
kind: ScheduledBackup
metadata:
  name: db-scheduled-backup
  labels:
    app.kubernetes.io/component: database-backup
spec:
  # six-field cron (seconds first): second 0, minute 0, every 12 hours starting at 11:00,
  # i.e. at 11:00 and 23:00 every day
  schedule: "0 0 11/12 * * *"
  backupOwnerReference: none
  immediate: false
  cluster:
    name: "iam-db" # Your Cluster resource name
```

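The backupOwnerReference field sets the owner reference of each Backup object that is created. The options are:

- “none” (the default): the Backup objects have no owner.
- “self”: the ScheduledBackup owns them.
- “cluster”: the Cluster owns them, so they are garbage-collected together with it.

Please refer to the documentation on backups for more information.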
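Each run of the ScheduledBackup creates a Backup object. You can list these with kubectl get backups.postgresql.cnpg.io -n <namespace>, and you can also trigger one on demand. A minimal sketch follows; the name iam-db-on-demand is hypothetical. A Backup name like this is what the recovery example references.

```yaml
apiVersion: postgresql.cnpg.io/v1
kind: Backup
metadata:
  name: iam-db-on-demand # hypothetical name
spec:
  cluster:
    name: iam-db # the Cluster to back up, using its backup.barmanObjectStore settings
```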

Backup recovery

Recovering a database from a backup is fairly easy. You need to create a new “Cluster” resource and instead of bootstrapping a new DB from scratch, you bootstrap from a database backup resource:

```yaml
apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: iam-db
  labels:
    app.kubernetes.io/component: database
    app.kubernetes.io/version: 16.3-bullseye
spec:
  instances: 3
  description: "Keycloak DB"
  imageName: "ghcr.io/cloudnative-pg/postgresql:16.3-bullseye"
  primaryUpdateStrategy: unsupervised
  bootstrap:
    recovery:
      # database and owner must match the ones in the backed-up cluster (iam/iam above)
      owner: iam
      secret:
        name: db-credentials
      database: iam
      backup:
        name: your-backup-resource-name
  # configure storage types used
  storage:
    storageClass: longhorn-postgres-replica-storage
    size: 2Gi
  walStorage:
    storageClass: longhorn-postgres-replica-storage
    size: 2Gi
  # prometheus
  monitoring:
    enablePodMonitor: true
  # see https://cloudnative-pg.io/documentation/1.22/kubernetes_upgrade/
  nodeMaintenanceWindow:
    reusePVC: false # rebuild from other replica instead
  backup:
    retentionPolicy: "60d"
    barmanObjectStore:
      destinationPath: s3://databases/iam-db
      endpointURL: https://your-s3-minio-or-aws-url
      s3Credentials:
        accessKeyId:
          name: barman-credentials
          key: access-key-id
        secretAccessKey:
          name: barman-credentials
          key: secret-access-key
      wal:
        compression: gzip
      data:
        compression: gzip
        jobs: 2
```
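If the Backup object itself is gone (for example after deleting the whole namespace), CloudNative-PG can also bootstrap directly from the object store through an externalClusters entry. A minimal sketch follows. It assumes the original cluster was named iam-db and archived to the destinationPath used above; the external cluster name iam-db-backup is hypothetical.

One caveat: the operator checks that the WAL archive location is empty before it starts archiving. If the restored cluster also gets a backup section, give it a new destinationPath or serverName rather than the one it restores from.

```yaml
apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: iam-db
spec:
  instances: 3
  imageName: "ghcr.io/cloudnative-pg/postgresql:16.3-bullseye"
  bootstrap:
    recovery:
      source: iam-db-backup # refers to the externalClusters entry below
      database: iam
      owner: iam
      secret:
        name: db-credentials
  externalClusters:
    - name: iam-db-backup # hypothetical name
      barmanObjectStore:
        destinationPath: s3://databases/iam-db
        endpointURL: https://your-s3-minio-or-aws-url
        serverName: iam-db # folder the original cluster archived under (its cluster name)
        s3Credentials:
          accessKeyId:
            name: barman-credentials
            key: access-key-id
          secretAccessKey:
            name: barman-credentials
            key: secret-access-key
  storage:
    storageClass: longhorn-postgres-replica-storage
    size: 2Gi
  walStorage:
    storageClass: longhorn-postgres-replica-storage
    size: 2Gi
```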

Drain nodes with CloudNative-PG volumes

When upgrading my Kubernetes host VMs from Ubuntu Server 22.04 to 24.04, I ran into several issues related to Longhorn and CloudNative-PG.

My usual host OS upgrade process consists of draining a node, then applying the upgrades, rebooting the node, and finally uncordoning the node.

When attempting the upgrade to Ubuntu Server 24.04, I first ran into some common issues with PodDisruptionBudget configurations that prevented the node from draining (unrelated to CloudNative-PG).

After fixing those issues, the nodes still would not drain correctly. This was because Longhorn refuses to drain a node that holds the last remaining replica of a volume.

The StorageClass we use for our CloudNative-PG databases has only one replica per volume, so Longhorn prevents the node from draining. To solve this, you can (temporarily) set the “Node Drain Policy” in Longhorn to “always-allow” on the settings page of the Longhorn web UI.

If you use GitOps you could also set the “defaultSettings.nodeDrainPolicy” to “always-allow” (when installing using Helm).

We can now successfully drain a node even when it contains a CloudNative-PG volume. Before draining, though, there is one step left: we need to put the CloudNative-PG “Cluster” resources into node maintenance mode. Edit your “Cluster” resource as follows:

```yaml
apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: iam-db
  labels:
    app.kubernetes.io/component: database
    app.kubernetes.io/version: 16.3-bullseye
spec:
  # ...
  nodeMaintenanceWindow:
    inProgress: true # add this field
  # ...
```

Apply the changes. When the node is drained, CloudNative-PG will fail over if needed and recreate the evicted instance as a new replica with a new PVC.

To drain a node, I then used the following command:

```shell
kubectl drain <NODE-NAME> --ignore-daemonsets --delete-emptydir-data --grace-period=360
```

You can also verify that all pods have been evicted from the node. Only pods controlled by DaemonSets should still be running:

```shell
kubectl get pods --all-namespaces --field-selector spec.nodeName=<NODE-NAME>
```
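Once the node has been upgraded, rebooted and uncordoned (kubectl uncordon <NODE-NAME>), maintenance mode can be switched off again. If you changed Longhorn's Node Drain Policy only temporarily, set it back to its previous value as well. A sketch of the relevant part of the Cluster resource, assuming the rest of the spec stays unchanged:

```yaml
apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: iam-db
spec:
  # ...
  nodeMaintenanceWindow:
    inProgress: false # maintenance finished, back to normal operation
    reusePVC: false   # unchanged from the original Cluster spec
  # ...
```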

Thanks for reading my first blog post! Feel free to connect with me on LinkedIn. Special shoutout to Matteo Bianchi for providing feedback on my first post!